The digitization of paper documents holds enormous potential for businesses. As optical character recognition (OCR) technology advances, companies can digitize documents faster and more accurately than ever, so that software can search, sort, and analyze the data to derive valuable insights. However, a capable OCR solution alone is not enough: effective pre- and post-processing is also needed.

In this article, we will explore the current state of OCR, how machine learning augments it, and how it helps optimize business processes.

What is OCR?

Optical character recognition (OCR) technology converts text from images into machine-readable data. It is used for fast and accurate extraction of data from various document formats.

Although the term OCR is widely known, the technology itself is a black box for most. Before diving deeper into the technical details, it’s worth noting the various subcategories of OCR and their differences. The following four approaches all fall under the umbrella term OCR:

- Optical character recognition (OCR proper): Single typewritten characters

- Optical word recognition (OWR): Whole typewritten words

- Intelligent character recognition (ICR): Single typewritten or handwritten characters; uses machine learning

- Intelligent word recognition (IWR): Whole typewritten or handwritten words; uses machine learning

Depending on your use case, you may need different types of software, with or without machine learning capabilities.

The kind of text matters as well: document text, whether structured or unstructured, poses different challenges than scene text.

| Text form | Characteristics | Examples | Considerations for OCR |
| --- | --- | --- | --- |
| Structured document text | Data is organized in a predefined, consistent format | Receipts with defined fields, forms, invoices, identity documents | Easy to process: OCR can rely on layout analysis and field extraction |
| Unstructured document text | No fixed or consistent format, no predefined structure | Letters, reports, articles | Harder to process: OCR must handle continuous text and diverse layouts, focusing on text recognition without strict field boundaries |
| Scene text | Text in real-world outdoor and indoor environments | Street signs, billboards, vehicle plates | Hardest to process: varies greatly in shape, angle, contrast, and font |

It is therefore best to determine beforehand what kinds of text you will extract data from, so you can choose an appropriate software solution.

OCR workflows

Whether with or without machine learning, OCR is often embedded in a workflow that includes both pre- and post-processing.

Image preprocessing

Before the software begins scanning a document for characters or words, the input image will need some preparation. By optimizing the source image, you can achieve better end results.

Three of the most important adjustments concern angle and contrast.

- Skew correction: Even if captured with a flatbed scanner, scanned paper documents are often skewed at a slight angle. Correcting this makes it easier for the OCR software to establish text baselines and word boundaries.

- Image filters: Filters can be applied to remove artifacts from the image background, optimally preparing the input image for processing.

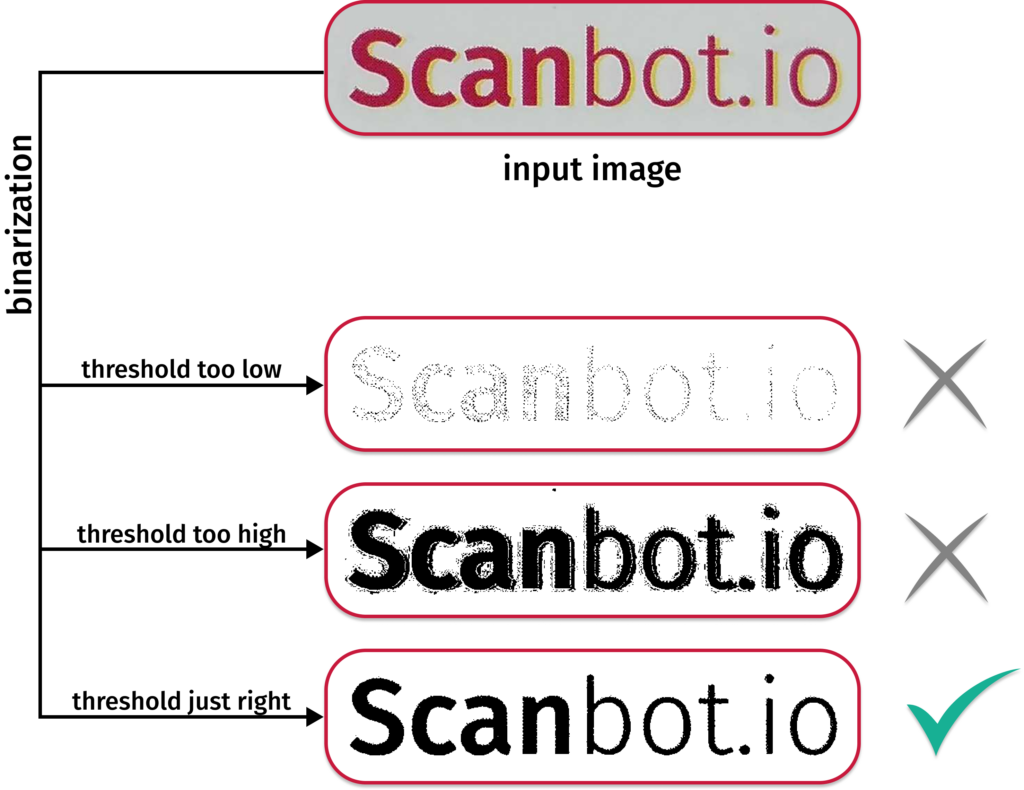

- Binarization: The document is reduced from color or grayscale to plain black and white, maximizing the contrast between text and background.
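As a rough illustration of two of the adjustments above, the following sketch binarizes a grayscale image and estimates its skew angle using only the Python standard library. It assumes the image is given as a list of rows of 0-255 integers. The specific techniques (Otsu's method for choosing the threshold, a projection-profile search for the skew angle) and all function names are illustrative choices that the article itself does not prescribe; production OCR software may work quite differently.

```python
import math


def otsu_threshold(gray):
    """Pick the 0-255 threshold that best separates dark from light pixels."""
    hist = [0] * 256
    for row in gray:
        for value in row:
            hist[value] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_score = 0, -1.0
    n_dark, sum_dark = 0, 0
    for t in range(256):
        n_dark += hist[t]
        sum_dark += t * hist[t]
        n_light = total - n_dark
        if n_dark == 0 or n_light == 0:
            continue
        mean_dark = sum_dark / n_dark
        mean_light = (sum_all - sum_dark) / n_light
        # Between-class variance, up to a constant factor.
        score = n_dark * n_light * (mean_dark - mean_light) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def binarize(gray, threshold):
    """Return a 0/1 image in which 1 marks ink (pixels at or below the threshold)."""
    return [[1 if value <= threshold else 0 for value in row] for row in gray]


def estimate_skew_degrees(binary, max_angle=5.0, step=0.5):
    """Estimate skew by testing candidate angles against the row profile.

    A positive result means text lines drift downward from left to right
    (image coordinates, with y growing downward).
    """
    ink = [(x, y) for y, row in enumerate(binary) for x, v in enumerate(row) if v]
    best_angle, best_score = 0.0, -1
    steps = int(round(max_angle / step))
    for k in range(-steps, steps + 1):
        angle = k * step
        slope = math.tan(math.radians(angle))
        profile = {}
        for x, y in ink:
            # Shear each ink pixel back by the candidate angle.
            r = round(y - x * slope)
            profile[r] = profile.get(r, 0) + 1
        # Well-aligned text concentrates ink in few rows, giving a high score.
        score = sum(count * count for count in profile.values())
        if score > best_score:
            best_angle, best_score = angle, score
    return best_angle
```

The estimated angle would then be passed to an image library to rotate the page in the opposite direction. That step is omitted here because it depends on the imaging toolkit in use.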

Text output and postprocessing

After capturing the entire document, the OCR software needs to output the text in a usable digital format. There are several options for this. The simplest way is a plain text file with all information in a single block of text, without any line breaks or layout. This may be viable for very short texts, but less so for larger documents or complex forms.

Some tools offer to generate a Word file that mimics the formatting of the input document. However, the result is often less than perfect and may be hard to edit.

Another option is to overlay the recognized text as an invisible layer on top of a PDF file of the input image. This maintains the look of the original, but text can still be highlighted and searched for in the file using just a PDF viewer.
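To make the difference between a single block of text and layout-aware output more concrete, here is a minimal sketch that restores line breaks from word positions. It assumes the OCR engine reports a bounding box for each recognized word, which many engines do but which the article does not state. The `Word` type and `words_to_lines` function are hypothetical names introduced for this example.

```python
from typing import NamedTuple


class Word(NamedTuple):
    """One recognized word with its position (hypothetical structure)."""
    text: str
    x: int       # left edge in pixels
    y: int       # top edge in pixels
    height: int  # box height in pixels


def words_to_lines(words):
    """Group words into text lines by vertical position, then join them."""
    lines = []  # each entry is the list of words on one text line
    for word in sorted(words, key=lambda w: (w.y, w.x)):
        center = word.y + word.height / 2
        for line in lines:
            first = line[0]
            # A word belongs to a line if its vertical center falls
            # inside the box of the first word on that line.
            if first.y <= center <= first.y + first.height:
                line.append(word)
                break
        else:
            lines.append([word])
    return "\n".join(
        " ".join(w.text for w in sorted(line, key=lambda w: w.x))
        for line in lines
    )
```

For example, four words from two slightly uneven rows come back as two lines of text rather than one run-on block. Real layouts with columns or tables would need more than this simple vertical grouping.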

How machine learning improves OCR capabilities

The purpose of extracting data from a document with OCR is to eliminate manual data entry in favor of automatic processing. By augmenting the process with deep learning technology, you can achieve more accurate results.

Let’s assume, for example, that you want to automatically extract text from invoices. Invoices are structured documents – but only to a degree. Although you know that they all contain certain pieces of information, the location of, say, the date or the amount will vary across documents. Less sophisticated OCR engines may not be able to reliably extract this data.

A machine learning model can be trained to recognize data types such as addresses and account numbers and to label them accordingly. The labeled values can then be extracted as key-value pairs and passed on for automated processing.
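The sketch below shows what key-value output for an invoice might look like. Note that it uses hand-written regular expressions as a simple rule-based stand-in, not a trained machine learning model, so it only illustrates the shape of the result. The field names, the patterns, and the date format are assumptions made for this example.

```python
import re

# Hypothetical fields and patterns; a trained model would replace these rules.
FIELD_PATTERNS = {
    "invoice_number": re.compile(
        r"invoice\s*(?:no\.?|number|#)\s*:?\s*([A-Z0-9-]+)", re.IGNORECASE
    ),
    "invoice_date": re.compile(r"\b(\d{4}-\d{2}-\d{2})\b"),
    "total_amount": re.compile(
        r"\btotal\b[^\d\n]*(\d+(?:[.,]\d{2})?)", re.IGNORECASE
    ),
}


def extract_fields(ocr_text):
    """Return a dict of field name to first matching value (or None)."""
    fields = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = pattern.search(ocr_text)
        fields[name] = match.group(1) if match else None
    return fields
```

Because the patterns search the whole text, they do not depend on where a value sits on the page. They do depend on predictable wording, which is exactly the limitation a trained model is meant to overcome.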

Deep-learning-assisted OCR is also crucial for scene text recognition. For instance, you might like to automatically register vehicles entering your company premises using a license plate scanner. In this case, OCR technology alone is not enough. Instead, you will need a trained model that first recognizes and captures the license plate, then applies filters and other adjustments to the image for better processing, reads out the characters on the plate, and finally saves them in a machine-readable format.

Implementing OCR to optimize business processes

Many companies are trying to reduce the number of paper documents in circulation. One strategy is to scan every incoming paper document, file the scan electronically in a document management system so that everyone who needs it has access to it, and then immediately archive the paper version.

This approach of storing documents as plain image files has several serious shortcomings that OCR can address:

- Limited searchability: Plain image files cannot be searched for specific text. OCR can dramatically shorten the time spent retrieving documents and finding the needed information.

- No data extraction: Image scanning alone provides no usable data for analysis or automation purposes. Without OCR, businesses miss out on insights and efficiencies.

- Poor scalability: Advanced document management systems scale well, as they offer search, sharing, and version control capabilities. A system based on document images is far less efficient.

- Limited readability: Damaged, faded, or poorly scanned documents are less readable, and thus less useful. Modern OCR scanning solutions can minimize these defects.
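To illustrate the searchability point from the list above: once OCR has produced text for each scan, even a very small inverted index makes the archive searchable. The sketch below assumes the OCR results are available as a mapping from file name to recognized text. The function names and file names are hypothetical, and a real document management system would use a proper search engine instead.

```python
import re
from collections import defaultdict


def build_index(ocr_texts):
    """Map each lowercase word to the set of documents that contain it."""
    index = defaultdict(set)
    for doc_id, text in ocr_texts.items():
        for token in re.findall(r"\w+", text.lower()):
            index[token].add(doc_id)
    return index


def search(index, query):
    """Return the documents that contain every word of the query."""
    tokens = re.findall(r"\w+", query.lower())
    if not tokens:
        return set()
    result = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        result &= index.get(token, set())
    return result
```

With two scanned files indexed this way, a query such as `search(index, "acme invoice")` returns only the files whose recognized text contains both words, something a folder of plain images cannot offer.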

You no longer even need expensive hardware to benefit from OCR. Thanks to advances in mobile processing power and image processing technology, ordinary smart devices equipped with OCR software can serve as enterprise-grade mobile OCR scanners.

Leverage OCR for automated processing with mobile data capture technology

Today, you can turn documents into searchable PDFs with a simple scan, thanks to the Scanbot OCR SDK. Or extract data from structured documents, such as ID documents, with our specialized Data Capture Modules.

Through years of collaboration with our customers, we have developed a deep understanding of just how crucial image quality is for OCR. That’s why the Scanbot Document Scanner SDK comes with extensive usability and image enhancement features. User guidance, deskewing, automatic cropping and other functions simplify the scanning experience and improve output quality.

With the Document Scanner SDK, industry leaders like Morgan & Morgan were able to establish an OCR workflow suitable for automated document processing. Thanks to the high-quality input images, the law firm’s document management system can now use OCR to classify documents and extract information.

See for yourself how the Scanbot SDK enables reliable automatic data extraction and try our free Data Capture Module demo apps.

If you want to discuss your use case, message our solution experts at sdk@scanbot.io.