With the rise of highly optimized AI models that run locally on your smartphone, on-device processing has moved into the spotlight. The convenience of using AI applications offline is not the only reason to cut the cloud connection – crucially, it also helps keep your data secure and confidential.

As a company providing scanning SDKs, we have internalized the value of secure, privacy-focused applications of artificial intelligence. Our computer vision models process data exclusively on the device.

Let’s take a closer look at why you should prioritize on-device processing when choosing your software solutions.

What is on-device processing?

On-device processing refers to software using only the resources of the hardware it is running on. Applied to artificial intelligence, it means that the software does not need to connect to a machine learning model on an external server.

Tasks like natural language processing or image generation are computationally expensive. Specialized chipsets, however, make it possible to run such tasks even on less powerful hardware, such as smartphones. These chipsets often include an NPU (neural processing unit), also called an AI accelerator.

Processing data on the device reduces latency, which can lead to faster results and a better user experience. It also improves privacy, as the data never leaves the device.

The role of data privacy in AI

Privacy is the key reason behind the current move toward on-device processing – or on-device intelligence, in the context of AI applications.

When large language models (LLMs) exploded onto the scene at the end of 2022, a central issue quickly emerged: What data were these models trained on? And how did they handle user input? Businesses, in particular, were eager to experiment with this new technology for their workflows, but were deterred by the lack of transparency around data processing.

Soon, enterprise tiers for popular AI tools emerged with the promise of not using user data to train the models. However, another problem remains: Data still travels back and forth between the user and the service, and these transmissions can pose a security risk if they are not properly encrypted.

Fortunately, engineers were able to significantly reduce the size of machine learning models using techniques like neural architecture search and pruning. This eventually led to highly optimized models capable of running on mobile devices. As a result, phone manufacturers are now implementing on-device intelligence on their flagship models and focusing on privacy in their advertising.
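To give a rough idea of what pruning means in practice, here is a minimal sketch of magnitude pruning, one common variant of the technique. It sets the weights with the smallest absolute values to zero so the model can be stored and run more cheaply. The function name `magnitude_prune`, the `sparsity` parameter, and the example weights are illustrative assumptions, not part of any particular framework.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude.

    Hypothetical helper for illustration only; real frameworks prune whole
    tensors and usually fine-tune the model afterwards.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")

    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_indices = set(order[:n_prune])

    return [0.0 if i in pruned_indices else w for i, w in enumerate(weights)]


# Made-up example weights: half of them are removed.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```

In this toy example, the three weights closest to zero are dropped while the three largest are kept unchanged.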

Still, some tasks require far more resources than even phones with AI accelerators can provide. One approach is to differentiate between tasks that can be performed on the device and those that cannot. For the latter, the data is end-to-end encrypted before it leaves the device and then transmitted to specialized, security-hardened server infrastructure. There, it is processed, the result is sent back, and the data is removed from the server node.
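The split between local and remote tasks can be pictured as a simple routing decision. The sketch below is only an illustration under stated assumptions: the `Task` and `Route` types, the memory-based criterion, and all numbers are hypothetical, and the encryption and transmission steps are deliberately left out.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on_device"
    SECURE_SERVER = "secure_server"


@dataclass(frozen=True)
class Task:
    name: str
    required_memory_mb: int  # hypothetical estimate of the model's footprint


def choose_route(task: Task, device_memory_budget_mb: int) -> Route:
    """Keep a task on the device if it fits the (assumed) memory budget."""
    if task.required_memory_mb <= device_memory_budget_mb:
        return Route.ON_DEVICE
    # Anything too large would be encrypted and sent to hardened servers.
    return Route.SECURE_SERVER


# Made-up tasks and budget, for illustration only.
tasks = [Task("barcode_scan", 50), Task("long_document_summary", 16000)]
routes = {t.name: choose_route(t, device_memory_budget_mb=4000) for t in tasks}
print(routes)
```

A real system would likely weigh more than memory, such as battery level or latency requirements, but the principle stays the same: data only leaves the device when the task cannot be handled locally.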

Until the next big leap in computing efficiency (which DeepSeek might herald), companies will have to live with these kinds of compromises for certain tasks.

However, there are also machine learning applications that have had plenty of time to mature and can be run on virtually any device.

Computer vision: a prime example of on-device intelligence

As a subfield of artificial intelligence, computer vision uses machine learning to extract meaningful information from visual inputs. This allows computers to recognize objects, detect patterns, and make decisions based on visual data. With research going as far back as the 1960s, this technology is now exceptionally refined and efficient. Today, it has found its way into self-driving cars, operating rooms – and a variety of mobile apps.

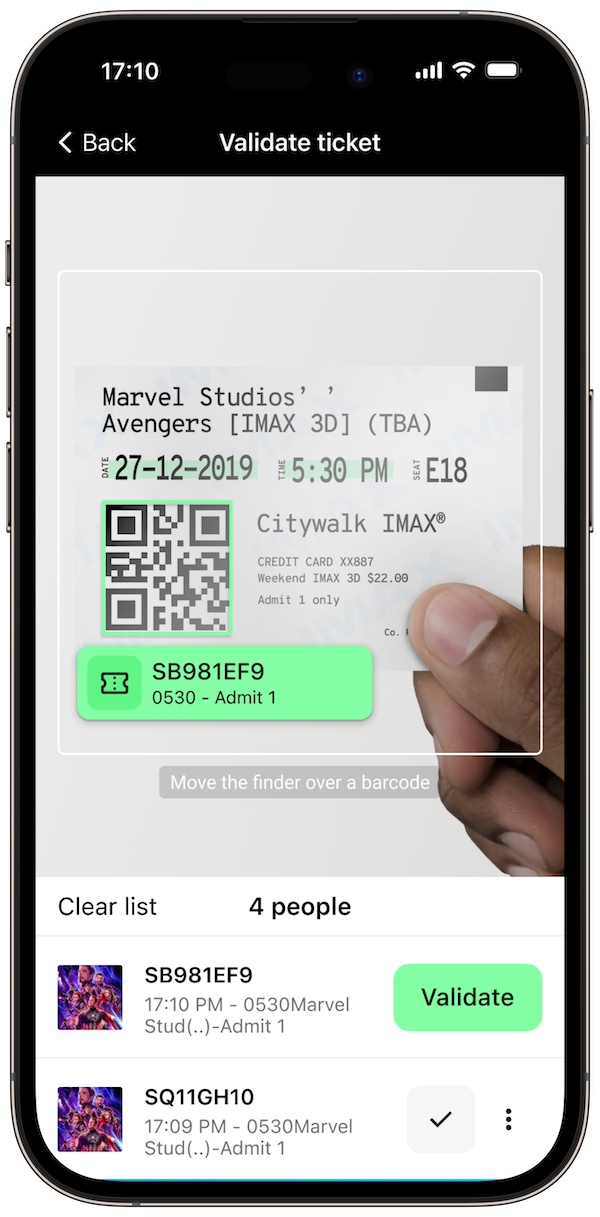

Such computer vision algorithms are at the core of the Scanbot SDK: They are what allows our customers to capture and extract information from barcodes, documents, and all kinds of structured data in seconds.

Thanks to the technology’s maturity and computational efficiency, our customers can use the SDK’s functionalities without an internet connection. The fact that all processing happens on the device is especially important for our clients in sensitive industries like healthcare, insurance, and finance.

The same client-centric philosophy also informs how we develop and distribute our solutions. We train our machine learning models in-house, using only training data we created ourselves. Whenever we improve a model, our clients receive it in a mobile-friendly size as part of the next SDK update – ready to be run locally on any supported device.

Conclusion

As artificial intelligence becomes more sophisticated, demand for on-device data processing will only increase. Running machine learning models and the applications that use them locally increases data privacy, enhances security, and reduces latency. If you are considering leaving the cloud behind, there has never been a better time.